Software Testing Mesmerization: Delving into DomJudge

Mar 28, 2020Preface

“Technology does not change who we are. It magnifies who we are. […] But lately, it seems, this industry is becoming better known for less noble innovation; the belief that you can claim credit without accepting responsibility. We see it every day now with every data breach, every privacy violation […] Too many seem to think that good intentions excuse away harmful outcomes, but whether you like it or not what you build and what you create define who you are. It feels a bit crazy that anyone should have to say this. But if you built a chaos factory, you can’t dodge responsibility for the chaos.” – Tim Cook, 2019 Stanford Commencement Address

We build software every day. But, oftentimes, in part, because we’re too proud of our own work, we think that our software is perfect, which is very unlikely. Indeed, it is extremely difficult and time-consuming to write flawless code and verify it; nor does the market seem to want it in general. Well, it’s okay to be not perfect as long as you don’t say so. But if you happen to write software that would cause serious ramifications on people’s lives, you should test it properly before its release. It’s your responsibility to ensure that your code doesn’t become a menace. Such literature of art is called: Software Testing.

I had two items to play around for this topic. One is from my hands-on experience with running an automated judging system for ICPC-style programming contests, as a contest chair in undergraduate (contestants up to 400). The other is from my lab’s striving for VPN access. The bug was related to an Intel NIC driver of Ubuntu’s stable releases. Although the latter case provides us with much more rigorous documentation and would be regarded as more ‘significant,’ here, I opt for the former case as I think it will provoke more multifaceted thinking in the perspective of Software Testing. Indeed, the entire judging system itself is a form of software testing. If interested in the latter case, please check this link for a brief summary and the fix.

Domjudge

Domjudge is an automated judging system for running programming contests. It provides interfaces for participants (called “teams” in the system) to submit their solution and receive the verdict, for juries to review the verdicts internally, for the administrator to coordinate logistics for contests [1]. Every submission is tested against a dataset stored in the server and its output is compared against the jury’s. In addition, its execution has to be within the designated time limit (e.g. 0.1-5 sec) and memory limit (e.g. 16MB-1GB); otherwise it is forcefully terminated. It does not reveal what test cases resulted in the verdict to the teams, but to the juries and the administrator for real-time inspection. It has been used in big live contests, including dozens of ICPC regionals and the World Finals and university contests. As of Mar 26, 2020, it received 393 stars and 8,215 commits and is in active development.

The remainder of this section explains the judging system and enumerates faults, errors, and failures that have been found in my firsthand experience.

Teams

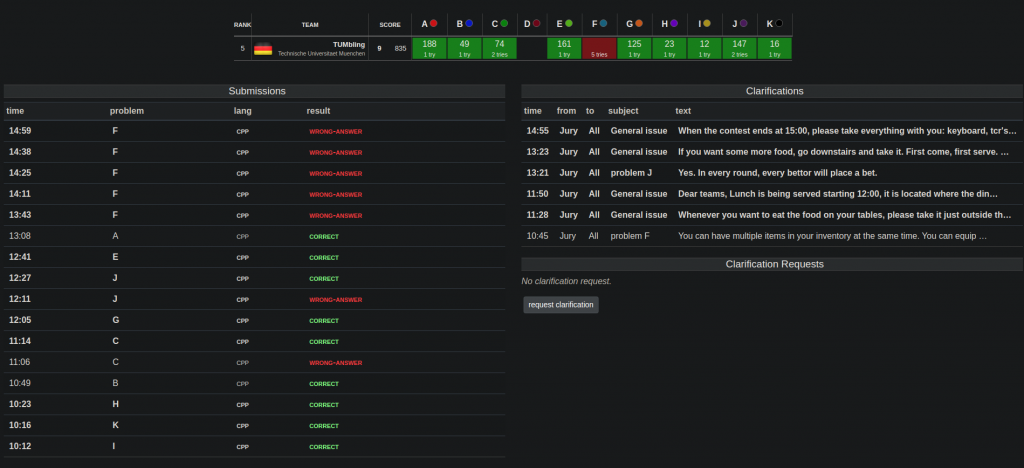

Team’s Submission View - https://www.domjudge.org/demoweb/team

Teams have the most restrictive view and control of the judging system. Their submissions are sent to the central database first and then to some (one for normal cases) of the “judging hosts” for execution. As it is assumed that their intent might be malicious or fooling (i.e. game the CPU time limit imposed by invoking yield() and do background job, and/or communicate outside of the system), the judging hosts are designed to prevent those from happening, vehemently.

Jury

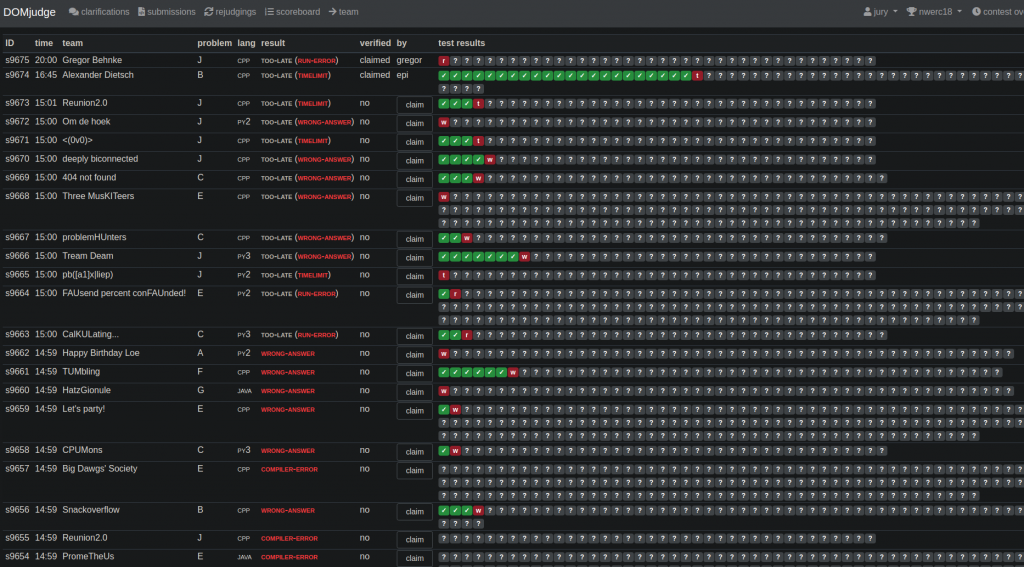

Jury’s Monitoring View - https://www.domjudge.org/demoweb/jury

The juries do the human check as the prepared testing may not be without any flaws. In most cases, there are only false positives—those that the testing could not rule out as not “CORRECT” in the absence of the edge case that reveals it, which is fine for a programming contest. However, there can be false negatives as well, which brings forth irrevocable consequences in a real-time programming contest. (The teams will spend time on the problem for which they were actually correct.) Ideally, we should strive to prepare a perfect problem set, but it’s not always possible due to the very nature of software testing. In those cases, it’s best to find out real-time by monitoring popping verdicts. Common mistake comes with whitespacing policy and floating-point (FP) precision.

As for the FP, the problem writers often think that their solution is perfect (which is the same kind of arrogance as many contestants'), and regard their computed FP values as true value, which aren’t. Their computation is also subject to FP computing error. When compared against those of the contestants, who may have invented other ways to calculate them, they may happen to be off the margin (typically, 10^-6 or 10^-9) from the jury’s. Hence, “wrong-answer”. Indeed, the margin should be set 2*x(or sqrt(x) if it somehow involves division) where x is the specified one (that the problem writer put in the problem description) or the computation should have done with a much more precise machine. The following is a mistake:

/* The three following functions may be compiled and executed in

separate machines. */

float jury_compute_pi_w_trigono_funcs(const unsigned int step=100) {

/* do the FP arithematic */

/* .. */

return make_believe_true_value

}

float team_compute_pi_w_trigono_funcs(const unsigned int step=100) {

/* do the FP arithematic */

/* .. */

return team_ans

}

bool judge(...) {

return abs(make_believe_true_value - my_answer) <= specified_margin;

}

A correct way is:

#define <math.h>

bool judge(...) {

// M_PI is a precomputed value in <math.h>

return abs(M_PI - team_ans) <= specified_margin;

}

Or,

{

/* .. */

return abs(judge_ans - team_ans) <= cablirated_margin

}

Fault

What is the fault here? The margin that is set in the system should have been twice the specified one. Here, if we regard the entire judging system as a software, the fault is the misconfigured margin that serves as a grace error between the team’s output and the jury’s. For example, if the error that is said to be allowed in the description is 10^-9, the margin should be set as 2 * 10^-9.

Error

The error, defined as an undesired state of the system, is a “wrong-answer” in the JUDGING table in the central database.

Failure

The failure, defined as the manifestation of the error, is manifest when either the team or juries query the value via the web interface. However, the failure may not be recognized at all, unless vigilant juries manage to detect the fault during the contest by getting a signal from an unexpected number of ‘wrong-answer’s for the problem or the team, if allowed, challenges the verdict afterward.

How to prevent

To the best of my knowledge, there is no “automated” way to detect this type of fault. It’s just an engineering mistake. The “test input” here should be a set of solution code from human programmers. To prevent this type of human mistake, problem writers do cross-validate their problems prior to the real contest by testing numerous solutions as if they were participating in a contest. But that is not to say that they can test every possible way in advance. On the other note, the DOMjudge may put some words as to how to derive or set an FP error margin.

Discussion

Doubling the margin does not result in a perfect testing either. It suppresses the aforementioned type of false negatives, however, it may give rise to false positive now. As a matter of fact, it is nearly impossible to draw an exact line for FP computation error. Since this was a programming contest, I opted for the lenient side of the line. If it were part of numerical computation of a supercomputer, one should opt for the strict side of the line to avoid compromising the entire computation.

Truth be told: some problem writers did not waive their copyright for the datasets, wary that this kind of incident might be revealed afterward.

This was rather a misconfiguration with the open-source project. Let’s now move on to see a documented fault in the source code of the project.

Administrator

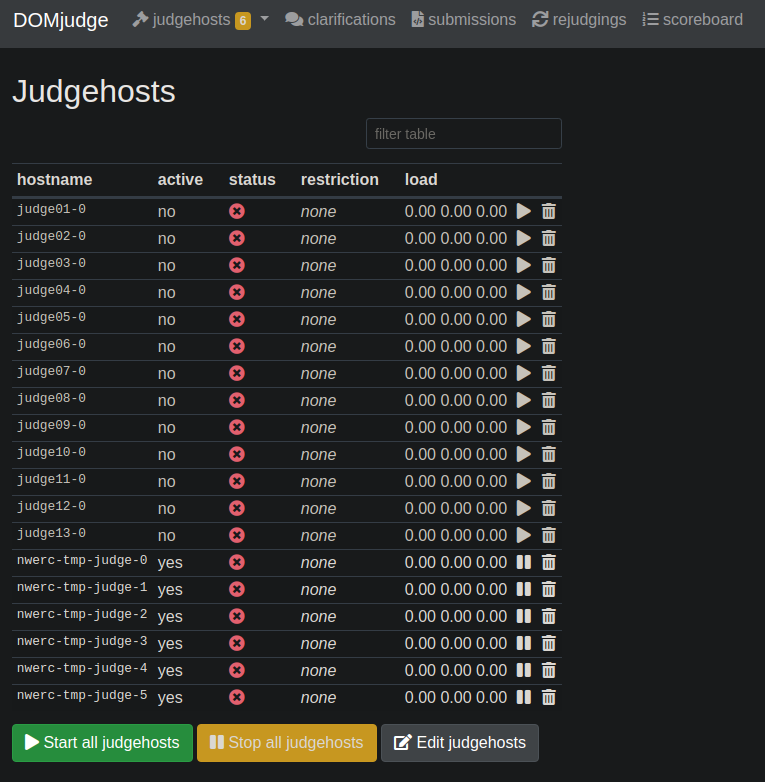

Administrator’s Judgehost Management Tab https://www.domjudge.org/demoweb/jury/judgehosts

The administrator can/should create multiple judging hosts (“judgehost[s]” hereafter) to execute and verify the submissions from the teams. Empirically, a minimum of 4-8 AWS EC2 t2.xlarge instances are required to accommodate a 100-teams-size-contest. The communications must be secured. A judgehost may die or stop responding due to various reasons, but the central server must be able to cope with all such possible scenarios. It has to not only maintain the consistency of the judging status, submissions, and contests but also proceed all judgings in a timely manner. Even if a submission is sent to a judging host under abnormal conditions, it should judiciously reschedule the execution in order to return the verdict to the team as soon as possible. It may sound simple but it is an extremely hard condition to meet or test as it involves concurrency. Here was the fault. Let’s think about the following scenario.

Scenario

- Judgehost A and B are running normally.

- Judgehost A executes a submission and gets its verdict, “wrong-answer’.

- However, the verdict is not sent to the central database, because it could not create a pipe for that as the disk was running out of space.

- The administrator is aware of the problem with judgehost A and stops it.

- The central server sends no more submissions to judgehost A.

- The central server instead orders judgehost B to execute the submission that was assigned to judgehost A. The verdict of judgehost B is “compile-error” as it could not finish the compiling, again, due to the lack of the disk space. Compilation aborted. After the object files are deleted from the disk, judgehost B can create a pipe, so it sends the verdict to the central database.

- The administrator enlarges the disk size on the AWS cloud and restarts the judgehost A.

- Judgehost A finishes the judgings and the results are sent to the database.

Then, on a jury’s screen,

shows https://*.*/submission.php?id=107 (IP masked):

| ID | start | max_runtime | judgehost | result | |

|---|---|---|---|---|---|

| j107 | 18:56 | 0 s | NULL | wrong-answer | |

| -> | j187 | 10:56 | s | ip---- | compiler-error |

error: domjudge query error(value SELECT result FROM judging WHERE submitid = 107 AND valid = 1): Query returned too many rows(2)To further inspect the state, I queried the same,

SELECT * FROM judging WHERE submitid = 107 AND valid = 1 | judgingid | submitid | starttime | endtime | judgehost | result | valid | output_compile |

|---|---|---|---|---|---|---|---|

| 107 | 107 | *704590.673028 | *704595.577489 | NULL | wrong-answer | 1 | [BLOB - 578 B] |

| 187 | 107 | *762175.915671 | *762176.436800 | ip-172-30-*-* | compile-error | 1 | [BLOB - 672 B] |

(trivial fields are omitted for brevity, and the IP masked)

The first table (or the set of rows in the DB’s term) seems similar to the second table, but we’re yet unsure if it is also a failure. It’s the state of the second table that prompts us the error message.

Error and Failure

In the second table, the submitidfield is the same but the judgingid, judgehost, and result are different. Both computing instances had the same error, i.e. the disk space starvation, if we regard the insufficient amount of disk space as an undesirable state. However, the failures were manifest differently—the first, the compilation succeeded but the creation of a pipe failed, hence a belated “wrong-answer” after disk size-up; the second, the compilation failed but the creation of pipe succeeded, hence an on-time “compiler-error”. Well, since the size of the disks were set the same, it was reasonable to see the disk space running out in proximate time.

Fault

What was the fault, then? If an isolated execution was part of design goals, the disk should have been properly virtualized. For instance, the judgehost should check the disk size prior to the execution. I created another issue for this—https://github.com/DOMjudge/domjudge/issues/278 .

The response was that “[i]n the master branch there is now a configuration setting [']Diskspace error[']. When that limit of free space is hit, the judgedaemon will abort and report this.” Yes. This is a way of preventing it. Maybe it should be turned on by default—Not turning on this functionality was a fault. The fix for it is referenced later.

How to prevent

In what way we could have prevented? Stress test. If we tested the judging environment in an exhaustive manner by automatic testing, i.e. pouring tons of submissions into the judgehosts, we could have faced the disk space starvation.

I created another issue—https://github.com/DOMjudge/domjudge/issues/277. The response was “[s]ee https://github.com/ubergeek42/domjudge-gatling for a way to stress[-]test a DOMjudge instance.” There was a resource available. It would have been easier to prevent this fault if a reference was provided appropriately.

If we do the stress test, the manifestation can be “wrong-answer” or “compiler-error”, or possibly else, depending on the remaining bytes on the disk and/or other concurrent executions if any. The human intervention (stopping judgehost A) can be simulated too but stopping the judgehost at the very event of low disk space seems to require the intuition of programmers and domain knowledge.

From the perspective of software engineering, it would be most desirable to aim at the complete isolation of the judgehosts. One would easily think of using existing PaaS (Platform as a Service)—Docker, Kubernetes, etc—as a solution.

Discussion

Okay, these are a fault and error of a subsystem. What are the fault and error of the entire system? I reported the failure for the entire system on Aug 27, 2017–https://github.com/DOMjudge/domjudge/issues/279–and received from one of the maintainers a response that the issue was already resolved on the development branch. Wait, there is no reference link directed to the fix and the issue thread became inactive. Alas, let’s dig out the dirt.

Observations

- The reported version was 5.1.2, and the fix had been already committed to a new development branch, which was not informed exactly, as of Aug 27, 2017. Let’s pick 6.0.0.

- The view seems to assume that there be at most one row that satisfies the WHERE statement, and the values of the

validfields are both set to 1. Indeed, the explanation part of the column says “[o]ld judging is marked as invalid when rejudging”. The first row should have been marked so. - The issue thread provides crucial information—the state of a database table. We can only look for a query update that touches the

validfield and its context!

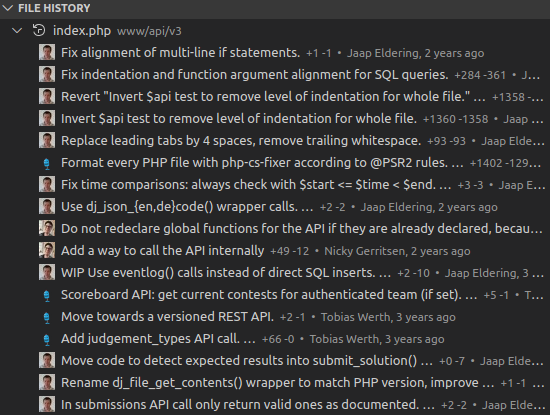

Traceback

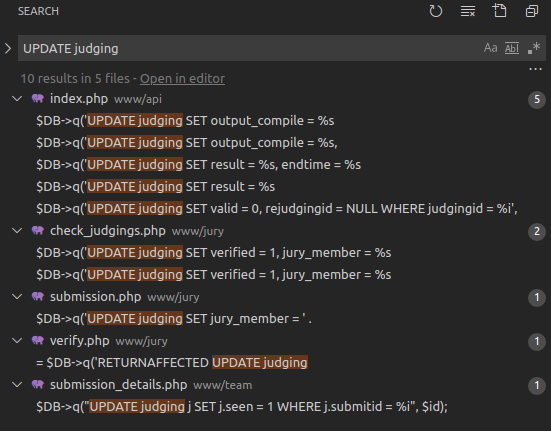

1. On the branch 5.1.2, Ctrl+F UPDATE judging :

2. The queries are executed conditionally. We have to see the context as well. For each file listed, go to the branch 6.0.0 and read carefully the commit logs.

Fix

After reviewing several commits, I could find the fix. It is on 5.2-dev(between 5.1.2 and 6.0.0), committed before Aug 27, 2017; but, the 5.2 was merged into the master thereafter. Hooray! Let’s take a look.

Post Internal Error / Disable Hudgedaemon on Low Disk Space

The commit can be found at de068b .

@@ -39,7 +39,9 @@

INSERT INTO `configuration` (`name`, `value`, `type`, `description`) VALUES

('diskspace_error_perc', '5', 'int', 'Minimum percentage of free disk space on judgehosts.'),

('diskspace_error_abs', '1048576', 'int', 'Minimum absolute free disk space (in kB) on judgehosts.');On the judgehost side, add configurations for disk space management in sql/mysql_db_defaultdata.sql. The minimum percentage and absolute size of free disk space are specified respectively.

@ judgedamon.main.php

while ( TRUE ) {

// Check for available disk space

$free_space = disk_free_space(JUDGEDIR);

$total_space = disk_total_space(JUDGEDIR);

$free_perc = (100.*$free_space) / $total_space;

$allowed_free_perc = dbconfig_get_rest('diskspace_error_perc');

$allowed_free_abs = dbconfig_get_rest('diskspace_error_abs'); // in kB

if ( $free_perc < $allowed_free_perc || $free_space < 1024*$allowed_free_abs ) {

$free_perc = sprintf("%01.2f%%", $free_perc);

$free_abs = sprintf("%01.2fGB", $free_space / (1024*1024*1024));

logmsg(LOG_ERR, "Low on disk space: $free_perc available, i.e. $free_abs");

$disabled = json_encode(array(

'kind' => 'judgehost',

'hostname' => $myhost));

$judgehostlog = read_judgehostlog();

$error_id = request('internal_error', 'POST',

'description=' . urlencode("low on disk space on $myhost") .

'&judgehostlog=' . urlencode(base64_encode($judgehostlog)) .

'&disabled=' . urlencode($disabled));

logmsg(LOG_ERR, "=> internal error " . $error_id);

}

//..//

judge($row);

}

@@ -1299,8 +1299,11 @@

function internal_error_POST($args)

if ( $disabled['kind'] == 'problem' ) {

give_back_judging($args['judgingid'], $submitid); // give back judging if we have to

$submitid = $DB->q('VALUE SELECT submitid FROM judging WHERE judgingid = %i', $args['judgingid']);

give_back_judging($args['judgingid'], $submitid);

}

On the side of the central server, it receives a notification from the judgehost via POST method that the judgehost has been down for low disk space. It then orchestrates to “give_back” judgings (potentially to the others, or the same judgehost can take over after its disk space is replenished and come back). As a result, it does not record the verdict to the database. Thus, no two valid verdicts.

How to prevent

On top of the discussion of the second issue, here, we can enforce integrity constraints to the table concerned, for example, there shall be at most one valid judging for a submission at any time.

Discussion

In this case, the faults lied not only on the server side but also within the subsystem. In order to prevent a fault of the subsystem from infecting the main system or vice versa, one should properly barricade the subsystem and the main system, with the abstraction layer of the subsystem or at least of the main system by constantly checking the integrity of the status. If performance matters, it can be in effect only in a debugging mode, with which we can do testing.

Summary

Throughout the post, we discussed:

1) FP computing error issue

- The fault was improper setting of a margin for the FP computing error

- The error was the false negative verdicts in the database

- The failure was an unexpected number of “wrong-answer” verdicts in the jury’s view, if noticed

2) Low disk space issue

- The fault was improper virtualization or isolation of the running environment with respect to disk space

- The error was a remaining space of the disk in the judgehosts

- The failure was manifest in the web view of the administrator (who could observe the stop of the judging progress) and of the jury (who could observe the query error message).

3) Two valid verdicts

- The fault was the misbehavior and mishandling of the subsystem (i.e. judgehost) in case of disk starvation.

- The error was the inconsistent database state which contained two ‘valid’ verdicts. There should be at most one for the criterion.

- The failure was manifest in the web view of the jury.

Note that there exists a causal relation between 2) and 3).